When you ship a meaningful product update, the work isn't done. Users need to understand what changed, why it matters to them, and how to use it. Release notes and changelog entries rarely accomplish this on their own. Most users skip them. Those who do read them often can't translate a feature description into action within their own workflow.

The TL;DR

-

Companies with mature release communication processes experience 60% fewer support tickets after deployments by delivering contextual guidance at the moment users need it.

-

Effective post-release communication uses contextual in-app prompts, smart segmentation by user role and plan, and measurement of adoption rates—not blanket email announcements.

-

Prioritize guidance for features that change existing workflows, require user action, or are critical to your roadmap—skip self-explanatory features or those serving small segments.

-

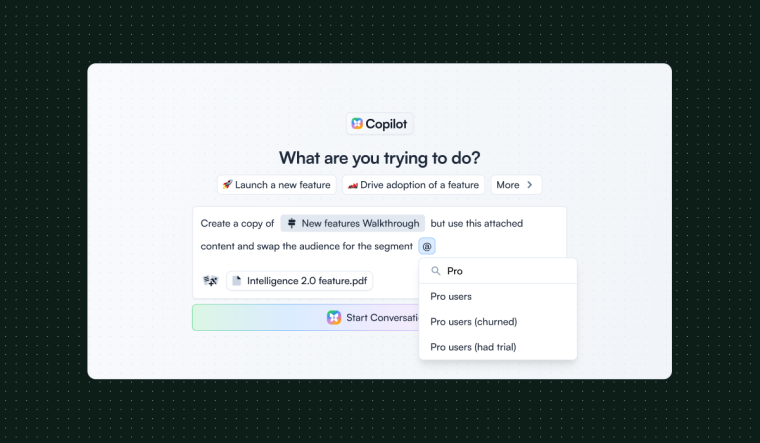

Chameleon enables product and marketing teams to create targeted release guidance without engineering dependencies, segment by user attributes, and measure adoption impact in real-time.

-

Track adoption funnel metrics: exposure → awareness → first use → activation → repeat use. Each stage reveals different optimization opportunities for improving feature adoption.

The result is predictable. Adoption lags. Support tickets spike with "how do I use this?" questions. Customer success teams scramble to explain updates on calls. Sales reps give inconsistent demos of new capabilities. Product and marketing teams end up manually creating one-off enablement materials for each release, which doesn't scale and still leaves most users confused or unaware.

This problem becomes acute as SaaS products mature. Early on, your user base is small and engaged enough that you can communicate changes directly. As you grow, you serve more user segments with different needs, roles, and usage patterns. A feature that's critical for one type of user is irrelevant noise for another. A change that requires immediate action for power users might confuse occasional users who haven't even adopted the previous version of that workflow. Blanket communication strategies stop working. You need a way to deliver the right guidance to the right users at the right moment, and you need to know whether it's actually driving adoption.

Why Release Communication Breaks Down at Scale

The core challenge is timing and relevance. Users encounter new features in different contexts and at different moments. Someone logging in the day after a release has different needs than someone discovering the feature three weeks later while trying to complete a specific task. A user on your enterprise plan who relies on advanced workflows needs different guidance than a free-tier user exploring basic functionality.

Traditional communication channels can't solve this. Email announcements get ignored or forgotten by the time the user needs the feature. Untargeted in-app banners and modals interrupt workflows and train users to dismiss notifications without reading them. Documentation and help centers require users to leave their task, search for the right article, and translate written instructions back into action within the product. Video tutorials have the same problem, with the added step of finding the right timestamp.

The gap widens when you consider internal teams. Customer success managers need to explain updates accurately on calls but often learn about releases at the same time as customers. Sales teams demo new features inconsistently because they lack a standardized walkthrough. Support agents field questions about changes they haven't been trained on. Enablement teams can't keep up with the release cadence, so they prioritize only the biggest launches, leaving smaller but still meaningful updates under-communicated.

But there's a harder problem underneath: you're shipping multiple features per sprint, and you can't create comprehensive guidance for everything. You need a way to decide which releases warrant the investment. Most teams don't have this framework, so they either try to communicate everything (creating noise) or communicate nothing (leaving users confused). Release communication has been treated as a one-time broadcast problem rather than an ongoing adoption problem where you must ruthlessly prioritize.

How Teams Approach Post-Release Feature Adoption

Teams that handle this well use a combination of in-product guidance, targeting, and measurement. The specific implementation varies, but the pattern is consistent: deliver contextual, task-oriented guidance at the moment a user is most likely to need it. Make it easy to skip or revisit. Instrument everything so you can see what's working.

Contextual [in-app guidance](https://www.chameleon.io/blog/in-app-guidance) works best for workflow changes or new UI elements where users need to take specific actions. According to Apty, it can reduce support tickets by 15-60%. This typically means tooltips, coach marks, or short guided tours that appear when a user first encounters the new or changed interface. Keep tours short: three-step tours reportedly reach 72% completion rates. Use this when the feature is self-contained and the value is immediately clear once someone knows it exists. Skip it for conceptually complex features that require deeper understanding, or for changes so minor that guidance feels like an interruption.

The key constraint is relevance. If you show the same walkthrough to every user regardless of role, plan, or usage pattern, you create noise. To avoid that, you need targeting logic based on plan tier, role, previous behavior, or first-time access to that area of the product. In effect, you are defining user segments and showing each piece of guidance only to the segments it is relevant to. Building and maintaining this targeting logic is where many teams get stuck. If it requires engineering work for every release, the process doesn't scale.
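To make the targeting idea concrete, here is a minimal sketch of a rule check in Python. The `User` and `GuidanceRule` shapes and every attribute name are hypothetical, chosen only for illustration. A real product would map them to its own user model or to the segment definitions of whatever guidance tool it uses.

```python
from dataclasses import dataclass, field


@dataclass
class User:
    # Hypothetical attributes; map these to your own user model.
    role: str
    plan: str
    visited_areas: set[str] = field(default_factory=set)


@dataclass
class GuidanceRule:
    # An empty set or empty string means "no restriction on this attribute".
    roles: set[str] = field(default_factory=set)
    plans: set[str] = field(default_factory=set)
    area: str = ""                 # product area the guidance belongs to
    first_visit_only: bool = True  # show only on first access to that area


def should_show(rule: GuidanceRule, user: User, current_area: str) -> bool:
    """Return True only when every targeting condition matches."""
    if rule.roles and user.role not in rule.roles:
        return False
    if rule.plans and user.plan not in rule.plans:
        return False
    if rule.area and current_area != rule.area:
        return False
    if rule.first_visit_only and current_area in user.visited_areas:
        return False
    return True


rule = GuidanceRule(roles={"admin"}, plans={"enterprise"}, area="reports")
admin = User(role="admin", plan="enterprise")
viewer = User(role="viewer", plan="free")

print(should_show(rule, admin, "reports"))   # True
print(should_show(rule, viewer, "reports"))  # False
```

Keeping rules as data rather than as conditionals scattered through the codebase is what lets a non-engineering owner change targeting without a deploy.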

Persistent "What's new" entry points work best for users who want to stay informed on their own terms and for features that don't require immediate action. This might be a modal that appears once after a release, a side panel accessible from the help menu, or a dedicated section in your product that lists recent updates. Use this when you're shipping multiple updates and need a single place to surface them without interrupting workflows. Skip it if you're only shipping one major feature per quarter, where direct guidance makes more sense.

The constraint is discoverability and prioritization. Users need to know the entry point exists and have a reason to check it. This usually means combining it with a lightweight notification or badge that appears after a release, then disappears once the user has seen it. You also need a way to prioritize which updates get featured and for how long. If every minor update gets equal treatment, users can't identify what actually matters to them.
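The badge-and-prioritization logic can be sketched in a few lines. This is an illustrative example, not a prescribed design. The `Update` fields, the priority scale, and the idea of a per-update "featured" window are all assumptions introduced here.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Update:
    title: str
    released: date
    priority: int       # hypothetical scale: 1 = major, 3 = minor
    featured_days: int  # how long the update counts toward the badge


def unseen_updates(updates, last_seen: date, today: date):
    """Updates released after the user's last visit to the panel and still
    inside their featured window, most important first."""
    fresh = [
        u for u in updates
        if u.released > last_seen
        and (today - u.released).days <= u.featured_days
    ]
    # Sort by priority, then newest first within the same priority.
    return sorted(fresh, key=lambda u: (u.priority, -u.released.toordinal()))


updates = [
    Update("Bulk export", date(2024, 3, 1), priority=1, featured_days=30),
    Update("New icon set", date(2024, 3, 10), priority=3, featured_days=7),
    Update("Saved filters", date(2024, 2, 1), priority=2, featured_days=14),
]

pending = unseen_updates(updates, last_seen=date(2024, 2, 15), today=date(2024, 3, 20))
print([u.title for u in pending])  # ['Bulk export']
```

The badge count is `len(pending)`. Once the user opens the panel, set `last_seen` to today and the badge clears. The minor update drops out after a week, while the major one stays visible for a month.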

Controlled rollout combined with measurement works best when you're unsure whether your guidance will be helpful or when you're testing different messaging approaches. Release guidance to a small cohort first, measure adoption and support impact, then iterate before expanding. Use this for features where adoption is critical to your roadmap or where you're changing established workflows. Skip it for low-stakes releases where the cost of getting it wrong is minimal.

The constraint is infrastructure. You need feature flags, cohort targeting, and analytics all working together. Most teams don't have this, which is why controlled rollout often isn't an option even when it would be the right approach. If you don't have this infrastructure, you're choosing between shipping guidance to everyone at once or not shipping it at all.
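If you have no feature-flag system at all, the cohort piece can be approximated with a deterministic hash. The sketch below is one common way to do percentage rollouts, not a description of any particular tool. The function name and the `guidance_key` naming are assumptions.

```python
import hashlib


def in_rollout(user_id: str, guidance_key: str, percent: int) -> bool:
    """Place each user in one of 100 stable buckets and include the first
    `percent` of them. The same user always lands in the same bucket for a
    given guidance_key, so raising `percent` only ever adds users."""
    digest = hashlib.sha256(f"{guidance_key}:{user_id}".encode()).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < percent


# Start with roughly 10% of users, then widen once the numbers look good.
print(in_rollout("user-42", "bulk-export-tour", 10))
print(in_rollout("user-42", "bulk-export-tour", 100))  # True
```

Including the guidance key in the hash means different releases get different cohorts, so the same users are not always the test group. This covers cohort assignment only. You still need analytics to compare adoption and support impact between the cohort and everyone else.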

Internal enablement in parallel works best when customer success, support, or sales are primary channels for explaining updates, especially for enterprise customers. Give these teams access to the same walkthroughs users see, or create internal-only versions they can use for training and demos. Use this when your product requires hands-on support or when you have a high-touch sales model. Skip it if your product is self-serve and internal teams rarely explain features directly.

The constraint is consistency and access. Internal teams need to preview and practice with the guidance before customers see it, but they also need to know when it changes. If guidance is updated based on user feedback, internal teams need to be notified so they don't continue explaining the old version. This creates a maintenance burden that many teams underestimate.

Deciding Which Releases Get Guidance

Not every release warrants the investment in guidance. You need a framework for deciding where to spend your time.

Create guidance when the feature changes an existing workflow that users rely on daily. These changes break muscle memory and generate support tickets if not explained. Also create guidance when the feature requires specific actions to unlock value, especially if those actions aren't obvious from the UI alone. Finally, create guidance when adoption of this feature is critical to your roadmap because other planned features depend on it.

Skip guidance when the feature is self-explanatory from the UI. Also skip it when the feature serves a small segment and you can enable them directly through customer success. Skip it when you're still iterating rapidly and the feature will likely change again in the next sprint. And skip it when adoption isn't actually important. Some features exist to serve edge cases or specific customer requests. Low adoption is fine.

The ROI threshold is roughly this: if creating and maintaining guidance takes more time than the support tickets and enablement calls you'd otherwise handle, don't do it. If you're shipping 10 features per quarter, you might create guidance for 2-3 of them. The rest get a changelog entry and nothing more.
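The threshold is simple arithmetic, and writing it down forces you to estimate the inputs. The sketch below assumes you can put rough hour figures on each side. Every parameter name and number is illustrative.

```python
def guidance_pays_off(
    build_hours: float,
    maintenance_hours: float,
    tickets_avoided: int,
    hours_per_ticket: float,
    calls_avoided: int,
    hours_per_call: float,
) -> bool:
    """Compare the time spent on guidance with the support and enablement
    time it is expected to save over the same period."""
    cost = build_hours + maintenance_hours
    savings = tickets_avoided * hours_per_ticket + calls_avoided * hours_per_call
    return cost <= savings


# A workflow change expected to generate many tickets: 18 hours of cost
# against 25 hours of estimated savings.
print(guidance_pays_off(12, 6, 80, 0.25, 10, 0.5))  # True

# A niche feature: the same 18 hours against 7 hours of savings.
print(guidance_pays_off(12, 6, 20, 0.25, 4, 0.5))   # False
```

The estimates will be rough, but even rough numbers make it easier to say no to guidance for the features that don't clear the bar.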

Patterns From Teams That Do This Well

Teams that successfully scale post-release communication share a few operational patterns, though few teams have all of these in place.

They treat release guidance as a repeatable process, not a one-off project. They have templates for common patterns like "new feature introduction," "workflow change," or "UI update." This reduces the time to create guidance for each release. They assign clear ownership, usually to product marketing or product management. That owner has the authority to publish guidance without waiting for engineering, though in practice this often requires negotiation with eng leadership about what can be changed without a deploy.

They build targeting logic into their guidance from the start. Instead of showing the same walkthrough to every user, they define which segments should see it. Targeting criteria include role, plan, behavior, or feature access. This requires upfront work to define segments and maintain them as the product evolves. But it prevents the noise problem that makes users tune out all guidance.

They measure outcomes, not just engagement. Completion rate for a walkthrough is less important than whether users who saw it actually adopted the feature. They track metrics like time to first use and percentage of eligible users who tried the feature within a week. They also monitor changes in support ticket volume for that feature. This assumes you have instrumentation in place to track feature usage, which many teams don't. If you can't measure adoption, you're limited to proxy metrics like guidance completion rate and support ticket volume.
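As a rough illustration, the two adoption metrics named above can be computed from a list of eligible users and a record of each user's first use of the feature. The function and input shapes are assumptions. In practice the data would come from your analytics events.

```python
from datetime import datetime, timedelta
from statistics import median


def adoption_metrics(release_at, eligible_users, first_use, window_days=7):
    """`first_use` maps user id -> datetime of first feature use.
    Users who never used the feature are simply absent from it."""
    deadline = release_at + timedelta(days=window_days)
    adopters = [
        u for u in eligible_users
        if u in first_use and first_use[u] <= deadline
    ]
    hours = [
        (first_use[u] - release_at).total_seconds() / 3600
        for u in eligible_users
        if u in first_use
    ]
    return {
        "adoption_rate": len(adopters) / len(eligible_users) if eligible_users else 0.0,
        "median_hours_to_first_use": median(hours) if hours else None,
    }


release_at = datetime(2024, 3, 1)
first_use = {
    "a": datetime(2024, 3, 2),
    "b": datetime(2024, 3, 5),
    "c": datetime(2024, 3, 20),
}

print(adoption_metrics(release_at, ["a", "b", "c", "d"], first_use))
# {'adoption_rate': 0.5, 'median_hours_to_first_use': 96.0}
```

To see whether guidance made a difference, run the same function separately for users who saw the walkthrough and users who did not. Unless exposure was randomized, treat the gap as a signal rather than proof, since users who encounter guidance may already be more active.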

They create feedback loops. Users can dismiss guidance or mark it as unhelpful, and that signal goes back to the team creating it. If a walkthrough has a high dismiss rate, the team investigates. Is it poorly timed? Irrelevant to that user segment? Unclear? They don't assume the first version will be right.
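A simple version of that signal is a dismiss rate per segment with a threshold for review. The sketch below assumes guidance events arrive as `(segment, action)` pairs. The threshold and minimum sample size are arbitrary placeholders to tune.

```python
from collections import Counter


def high_dismiss_segments(events, threshold=0.5, min_views=20):
    """`events` is an iterable of (segment, action) pairs, where action is
    'completed' or 'dismissed'. Returns segments whose dismiss rate exceeds
    the threshold, ignoring segments with too few views to judge."""
    views = Counter()
    dismissals = Counter()
    for segment, action in events:
        views[segment] += 1
        if action == "dismissed":
            dismissals[segment] += 1
    return {
        seg: dismissals[seg] / n
        for seg, n in views.items()
        if n >= min_views and dismissals[seg] / n > threshold
    }


events = (
    [("admin", "completed")] * 18 + [("admin", "dismissed")] * 6
    + [("viewer", "completed")] * 9 + [("viewer", "dismissed")] * 21
    + [("billing", "dismissed")] * 3
)

print(high_dismiss_segments(events))  # {'viewer': 0.7}
```

Here admins mostly complete the walkthrough, viewers mostly dismiss it, and the billing segment has too few views to draw a conclusion. The likely follow-up is to check whether viewers should be targeted at all.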

They also manage notification fatigue explicitly. Every team wants to use in-app guidance for their releases. Without a gatekeeper, your product becomes a parade of tooltips. Someone needs the authority to say no, this release doesn't get guidance. This is often the hardest organizational problem to solve.

Cross-Functional Coordination and Maintenance

The operational challenge isn't just creating guidance. It's coordinating across teams and maintaining it over time.

Someone needs to write the guidance copy. This is usually product marketing, but they often don't have capacity or don't understand the feature deeply enough. Product managers understand the feature but aren't trained in writing user-facing copy. This creates a review bottleneck. You need a clear process for who drafts, who reviews, and who has final approval. Without this, guidance gets delayed or ships with copy that doesn't match what engineering actually built.

Then there's the versioning problem. If you have users on different versions (mobile, web, enterprise on-prem), you can't show the same guidance to everyone. Some users don't have the feature yet. Some have an older version. You need targeting logic that accounts for version, which requires coordination between product, engineering, and whoever manages the guidance. Most teams don't have this coordination in place, so they either show guidance to everyone (confusing users who don't have the feature) or delay guidance until all versions are updated (missing the adoption window).
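One way to express version-aware targeting is a per-platform minimum version for each feature. This is a sketch under simplifying assumptions: the platform names and version numbers are invented, and versions are assumed to be purely numeric dotted strings.

```python
def parse_version(v: str) -> tuple[int, ...]:
    # Assumes versions like "4.10.0"; suffixes such as "-beta" are not handled.
    return tuple(int(part) for part in v.split("."))


# Hypothetical: the first version on each platform that includes the feature.
# A platform missing from the map has not received the feature yet.
FEATURE_MIN_VERSION = {"web": "4.2.0", "ios": "4.3.0"}


def has_feature(platform: str, client_version: str) -> bool:
    minimum = FEATURE_MIN_VERSION.get(platform)
    if minimum is None:
        return False
    return parse_version(client_version) >= parse_version(minimum)


print(has_feature("web", "4.10.0"))    # True
print(has_feature("ios", "4.2.9"))     # False
print(has_feature("on-prem", "9.0.0")) # False
```

Comparing parsed tuples rather than raw strings matters: as strings, "4.10.0" sorts before "4.2.0". The harder part is organizational, since someone has to keep the version map current as each platform ships.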

There's also guidance debt. You ship guidance for a feature, then the feature changes in the next sprint. Now your guidance is wrong. This happens constantly in fast-moving products. You need a process for updating or deprecating guidance, or it becomes a second documentation problem. Many teams don't account for this maintenance burden when deciding to invest in guidance.
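A lightweight check for guidance debt is to compare when each piece of guidance was last reviewed with when its feature last changed. The sketch below assumes you can get both dates per feature, for example from your guidance tool and your release log. The feature keys are invented.

```python
from datetime import date


def stale_guidance(guidance_reviewed, feature_changed):
    """Both arguments map a feature key to a date. Returns the features
    whose guidance was last reviewed before the feature last changed."""
    return sorted(
        key
        for key, reviewed in guidance_reviewed.items()
        if key in feature_changed and feature_changed[key] > reviewed
    )


guidance_reviewed = {
    "bulk_export": date(2024, 3, 1),
    "saved_filters": date(2024, 2, 10),
}
feature_changed = {
    "bulk_export": date(2024, 3, 15),
    "saved_filters": date(2024, 2, 1),
}

print(stale_guidance(guidance_reviewed, feature_changed))  # ['bulk_export']
```

Running a check like this at the end of each sprint turns guidance debt into a visible list that someone can own, either by updating the guidance or by retiring it.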

Finally, there are organizational dynamics. Sales and customer success teams sometimes resist in-app guidance because it reduces their perceived value to customers. If users can learn features on their own, why do they need a CSM? This isn't always explicit, but it affects adoption of guidance systems. You need to position in-app guidance as enabling internal teams to focus on higher-value conversations, not replacing them. This requires buy-in from leadership, not just product.

Where Chameleon Fits

Chameleon is built for teams that release frequently enough that manual guidance creation doesn't scale, and who need sophisticated targeting so different user segments see relevant updates without noise. It works best when you have clear ownership of release communication (usually product marketing or product management) and want to iterate quickly based on adoption data. If you're only shipping a few major releases per year, or if your product changes require deep conceptual education rather than in-context guidance, you probably don't need a dedicated tool yet.

The Build vs. Buy Decision

The question isn't whether to use a tool, but when building in-house makes sense versus buying.

Build in-house when you have specific targeting or measurement requirements that off-the-shelf tools can't meet, when you have engineering capacity to build and maintain the system, when the cost of a tool (usually $10K-$50K+ annually depending on user volume) is significant relative to your budget, or when you need deep integration with proprietary systems that third-party tools can't access.

Buy a tool when you need to move quickly and don't have engineering capacity to build, when you're testing whether systematic guidance actually improves adoption before committing to a larger investment, when you need non-technical teams to create and publish guidance without engineering dependencies, or when you want to avoid the ongoing maintenance burden of a homegrown system.

Most teams overestimate their ability to build and maintain this in-house. The initial build isn't hard. The ongoing maintenance, iteration, and cross-functional coordination are where homegrown systems break down. If you're not sure, start with a tool. You can always build later if you outgrow it.

When This Approach Isn't the Right Solution

This approach doesn't make sense if your releases are infrequent or minor enough that manual communication works fine. If you ship one or two meaningful updates per quarter, the overhead of building a scalable guidance process isn't worth it. The same is true if your user base is small or homogeneous. You're better off with direct communication and manual enablement.

It also doesn't work if your product changes are so complex that lightweight in-app guidance can't convey what users need to know. Sometimes adopting a new feature requires understanding new concepts, changing established workflows, or coordinating with other team members. In those cases, a tooltip or short tour won't be sufficient. You need deeper education through live training, detailed documentation, or hands-on onboarding with a customer success manager.

This approach assumes you can measure adoption and iterate based on data. If you don't have analytics instrumentation, you won't know whether your efforts are working. The same is true if you can't connect guidance to feature usage. You'll be creating guidance based on assumptions rather than evidence.

Finally, this approach doesn't solve the problem of users who don't log in regularly or who use only a small part of your product. If a user logs in once a month, they'll miss most of your release guidance. If they use only one workflow, guidance about other features is irrelevant. For these users, you still need channels that reach them outside their usual path through the product: email, a persistent notification waiting at their next login, or direct outreach from customer success.

Thinking Through Your Next Steps

Start by assessing whether this problem is actually costing you. Look at support ticket volume after recent releases, and ask your customer success team how much time they spend explaining new features on calls. As a point of comparison, companies with mature release communication processes reportedly see up to 60% fewer support tickets after deployments. Then check adoption rates for your last few releases: how long it takes users to try new features, and whether most users ever adopt them at all. If the cost is low, you probably don't need a systematic solution yet.

If the cost is real, identify which releases would benefit most from better guidance. Not every release needs a walkthrough. Focus on features that change existing workflows, require user action, or have historically caused confusion. Pick one upcoming release as a test case.

For that release, define what success looks like. Is it adoption rate within the first week? Reduction in support tickets? Time to first successful use? Pick metrics you can actually measure with your current analytics setup. If your analytics setup is insufficient, you have two options: use proxy metrics like support ticket volume and guidance completion rate, or invest in better instrumentation first. If you can't measure anything, skip systematic guidance entirely. You're better off with manual enablement where you can at least get qualitative feedback.
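If your analytics can count users at each stage of the adoption funnel (exposure, awareness, first use, activation, repeat use), a small calculation shows where users drop off. The sketch below uses made-up counts. How you define each stage for your own feature is an assumption you will need to make explicit.

```python
STAGES = ["exposure", "awareness", "first_use", "activation", "repeat_use"]


def funnel_conversion(counts: dict[str, int]) -> list[tuple[str, str, float]]:
    """Step-to-step conversion rate between each pair of consecutive stages."""
    steps = []
    for prev, nxt in zip(STAGES, STAGES[1:]):
        rate = counts[nxt] / counts[prev] if counts[prev] else 0.0
        steps.append((prev, nxt, round(rate, 2)))
    return steps


counts = {
    "exposure": 1000,
    "awareness": 600,
    "first_use": 180,
    "activation": 120,
    "repeat_use": 90,
}

steps = funnel_conversion(counts)
weakest = min(steps, key=lambda step: step[2])
print(weakest)  # ('awareness', 'first_use', 0.3)
```

In this example most users who try the feature keep using it, but only 30% of those who noticed it tried it. That points at the guidance itself rather than at the feature, which is the kind of distinction a single adoption number hides.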

Then decide who will own creating and publishing the guidance. This is usually product marketing, product management, or a dedicated product education role if you have one. Most teams don't. If you don't have clear ownership, assign it to whoever is currently creating release materials. Make sure they have the authority and tools to publish without waiting for engineering. If they don't, accept that you'll need engineering time for each release, which limits how often you can do this.

Create the guidance using whatever tools you have now. This might be a simple modal with a link to documentation, a short video embedded in your help center, or a manually triggered tooltip. The goal is to test whether guidance at the right moment actually improves adoption. You're not trying to build the perfect system.

Measure the results and talk to users. Did adoption improve? Did support tickets decrease? Did users find the guidance helpful or annoying? Use this to decide whether investing in a more scalable process is worth it.

If the test works and you're doing this frequently enough that the manual process is painful, then explore whether a dedicated tool makes sense. Focus on tools that let your team move quickly and iterate based on data. The goal is to make release communication repeatable and effective, not to add complexity.
