Most SaaS product teams eventually face the same question: should we build our own in-app onboarding system, or buy a dedicated tool? The decision matters because onboarding directly affects activation, adoption, and support load, but the infrastructure to support it is more complex than it first appears. What starts as "we just need a few tooltips" quickly expands into segmentation logic, experimentation workflows, analytics instrumentation, versioning, governance, and ongoing maintenance as your product and UI evolve.

The TL;DR

- Building custom onboarding infrastructure requires at least 3-6 months of engineering time initially, plus ongoing maintenance that can consume 20-30% of a developer's time as your product evolves.
- Buying a dedicated onboarding platform like Chameleon removes engineering from most routine onboarding changes, enables non-technical teams to iterate quickly, and typically costs $10K-$50K annually, often less than the opportunity cost of engineering time.
- The decision framework: Build if you have 50+ engineers and unique compliance requirements. Buy if you need fast iteration, have constrained engineering resources, or want to avoid the maintenance burden.
- Hidden costs of building include selector breakage when the UI changes, targeting logic complexity, analytics drift, versioning needs, and knowledge-transfer risk when team members leave.
- Chameleon provides visual editing, event-based targeting, A/B testing, and analytics integration: capabilities that would take months to build internally but are available immediately with a dedicated tool.

This page walks through the operational realities of each approach, the hidden costs that emerge over time, and how to decide what makes sense for your team right now.

Why this problem shows up as SaaS teams scale

Early-stage products often hardcode onboarding into the application itself. A few static modals, maybe a checklist in the UI. This works when onboarding is simple, your product changes infrequently, and you have engineering capacity to update flows with each release.

The problem surfaces when onboarding needs to change faster than your release cycle allows. Product teams want to test different messaging, show different flows to different user segments, iterate based on activation data, and respond quickly to user confusion or drop-off. But every change requires engineering work: updating code, testing across browsers and devices, coordinating deploys, waiting for mobile app releases to clear review.

At the same time, the scope of what "onboarding infrastructure" actually means expands. You need targeting rules so different user types see different experiences. You need frequency capping so users aren't shown the same tour repeatedly. You need analytics to measure completion and correlate onboarding with activation. You need versioning so you can roll back broken flows. You need permissions and audit logs for compliance. You need localization and accessibility support. You need a way for non-engineers to preview and edit content without touching code.
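To make the targeting and frequency-capping requirements concrete, here is a minimal sketch of the check a custom system would run before showing any tour. The `UserContext`, `TourRule`, and `ImpressionLog` shapes, and fields like `maxImpressions` and `cooldownDays`, are hypothetical and chosen for illustration; a real system would load rules from configuration and persist impressions per user.

```typescript
// Hypothetical shapes for illustration only.
interface UserContext {
  id: string;
  role: string;
  signupDaysAgo: number;
}

interface TourRule {
  tourId: string;
  allowedRoles: string[];   // targeting: which user types see this tour
  maxSignupAgeDays: number; // targeting: only show to recent signups
  maxImpressions: number;   // frequency cap: total times a user may see it
  cooldownDays: number;     // frequency cap: minimum gap between showings
}

interface ImpressionLog {
  count: number;
  lastShownMs: number | null; // epoch milliseconds of the last showing, if any
}

const DAY_MS = 24 * 60 * 60 * 1000;

// True only if the user matches the targeting rule AND is under the frequency cap.
function shouldShowTour(user: UserContext, rule: TourRule, log: ImpressionLog, nowMs: number): boolean {
  const targeted =
    rule.allowedRoles.includes(user.role) && user.signupDaysAgo <= rule.maxSignupAgeDays;
  if (!targeted) return false;

  if (log.count >= rule.maxImpressions) return false;
  if (log.lastShownMs !== null && nowMs - log.lastShownMs < rule.cooldownDays * DAY_MS) {
    return false;
  }
  return true;
}

const rule: TourRule = {
  tourId: "dashboard-intro",
  allowedRoles: ["admin", "editor"],
  maxSignupAgeDays: 14,
  maxImpressions: 2,
  cooldownDays: 3,
};
const now = Date.UTC(2024, 0, 10);
const admin: UserContext = { id: "u1", role: "admin", signupDaysAgo: 2 };

console.log(shouldShowTour(admin, rule, { count: 0, lastShownMs: null }, now)); // true
console.log(shouldShowTour(admin, rule, { count: 1, lastShownMs: now - DAY_MS }, now)); // false (cooldown)
console.log(shouldShowTour({ ...admin, role: "viewer" }, rule, { count: 0, lastShownMs: null }, now)); // false (not targeted)
```

Even this toy version needs a per-user impression store that survives sessions and devices, which is part of the scope that grows beyond "a few tooltips."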

What looked like a few weeks of engineering work becomes an ongoing maintenance burden. Selectors break when the UI changes. Targeting logic becomes tangled. Analytics events drift out of sync. The team that built it moves on, and nobody wants to own it. Meanwhile, the product and growth teams are blocked from running the onboarding experiments they need to improve activation.

This is when teams start evaluating whether to invest more deeply in a custom system or adopt a dedicated tool.

Approach one: Build a lightweight custom system and accept release-bound iteration

Some teams build a minimal onboarding layer into their application. This usually means a component library for modals, tooltips, and checklists, plus some basic logic for when to show them. Content and targeting rules live in code or configuration files. Changes go through the normal development and release process.

This approach works well when onboarding is relatively stable, you have a small number of flows that don't change often, and your team is comfortable with onboarding being tied to your release cadence. It also works if onboarding is highly integrated with product functionality in ways that would be awkward to decouple, for example when onboarding steps trigger backend state changes or need to read complex application state.
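A sketch of what "onboarding lives in code" typically looks like: a checklist whose steps are defined in the application bundle and whose completion is read straight from application state. The `AppState` fields and step IDs are hypothetical.

```typescript
// Hypothetical application state; in a real app this would come from your store or API.
interface AppState {
  projectsCreated: number;
  teammatesInvited: number;
  integrationConnected: boolean;
}

// Steps are defined in code, so changing copy or order means a code change and a release.
interface ChecklistStep {
  id: string;
  title: string;
  isComplete: (state: AppState) => boolean;
}

const ONBOARDING_CHECKLIST: ChecklistStep[] = [
  { id: "create-project", title: "Create your first project", isComplete: (s) => s.projectsCreated > 0 },
  { id: "invite-team", title: "Invite a teammate", isComplete: (s) => s.teammatesInvited > 0 },
  { id: "connect-integration", title: "Connect an integration", isComplete: (s) => s.integrationConnected },
];

// Progress is derived from product state, so there is no separate onboarding database to keep in sync.
function checklistProgress(state: AppState): { done: number; total: number; next: ChecklistStep | undefined } {
  const done = ONBOARDING_CHECKLIST.filter((step) => step.isComplete(state)).length;
  const next = ONBOARDING_CHECKLIST.find((step) => !step.isComplete(state));
  return { done, total: ONBOARDING_CHECKLIST.length, next };
}

const progress = checklistProgress({ projectsCreated: 1, teammatesInvited: 0, integrationConnected: false });
console.log(`${progress.done}/${progress.total}, next: ${progress.next?.title}`);
// 1/3, next: Invite a teammate
```

This shows both sides of the trade-off: completion reads directly from real product state, which is hard to replicate from outside the app, but even renaming a step is a code change that waits for the next release.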

The main advantage is control. You own the code, the data model, and the integration points. You can customize exactly how onboarding behaves and fits into your application architecture. There's no third-party dependency, no additional vendor to manage, and no per-seat or usage-based pricing to worry about.

The breakdown happens when iteration speed becomes critical. If you need to run weekly onboarding experiments, test different messaging for different segments, or respond quickly to user feedback, the release-bound model creates too much friction. Engineering becomes a bottleneck for every change. Product and growth teams can't move independently. Experimentation slows down, and you lose the ability to learn and improve quickly.

The other breakdown point is maintenance. As your product UI evolves, onboarding code needs constant updates. Selectors break, step sequences become outdated, edge cases multiply. If the team that built the system moves on, the knowledge and ownership often disappear with them. What was supposed to be a lightweight solution becomes technical debt that nobody wants to touch.

Approach two: Build a full onboarding platform with editor, targeting, and experimentation

Some teams decide to build a complete onboarding platform internally. This means an authoring interface where non-engineers can create and edit flows, a targeting and segmentation engine, experimentation and A/B testing capabilities, analytics instrumentation, versioning and rollback, permissions and governance, and often a preview or staging environment.
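Experimentation is one of those components that looks small but has subtle requirements: a user should land in the same variant on every page load and device, ideally without a database lookup. A common technique is to hash the user ID together with an experiment key. The sketch below uses the 32-bit FNV-1a hash followed by the MurmurHash3 `fmix32` finalizer; the function names and the equal split across variants are illustrative assumptions, not any particular vendor's implementation.

```typescript
// FNV-1a over UTF-16 code units, then MurmurHash3's fmix32 finalizer.
// The finalizer matters: FNV-1a's low bits mix poorly, and we take a modulus below.
function hash32(input: string): number {
  let h = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    h ^= input.charCodeAt(i);
    h = Math.imul(h, 0x01000193);
  }
  h ^= h >>> 16;
  h = Math.imul(h, 0x85ebca6b);
  h ^= h >>> 13;
  h = Math.imul(h, 0xc2b2ae35);
  h ^= h >>> 16;
  return h >>> 0; // unsigned 32-bit result
}

// Deterministic assignment: same user + same experiment always yields the same variant.
// Salting with the experiment key keeps one experiment's split from mirroring another's.
function assignVariant(userId: string, experimentKey: string, variants: string[]): string {
  return variants[hash32(`${experimentKey}:${userId}`) % variants.length];
}

const variants = ["control", "short-tour"];
const first = assignVariant("user-42", "welcome-tour-test", variants);
const again = assignVariant("user-42", "welcome-tour-test", variants);
console.log(first === again); // true
```

A production system would also need weighted splits, exposure logging, and a way to stop an experiment without reshuffling users, which is where much of the build effort goes.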

This approach makes sense when onboarding is a core strategic differentiator for your product, you have significant engineering resources to dedicate to it, and you expect to maintain and evolve the system over multiple years. It's most common at larger companies where onboarding requirements are highly specific, compliance and data residency needs are complex, or the product itself is an onboarding or workflow tool where building this infrastructure aligns with core competencies.

The advantage is complete customization and control. You can build exactly the workflows, targeting logic, and integrations you need. You own the data pipeline and can instrument events precisely how you want. You can integrate deeply with your product's state and backend systems in ways that would be difficult with a third-party tool.

The cost is significant and ongoing. Building a usable authoring interface alone typically takes one engineer three to six months, or a small team six to twelve weeks. Adding segmentation, experimentation, versioning, and governance often consumes a full engineering team for a year or more. As a rough benchmark, teams smaller than 50 engineers rarely have the capacity to build and maintain this successfully. The ongoing maintenance burden is real: keeping the system working as your product evolves, adding new capabilities as requirements grow, supporting new platforms or frameworks, handling security and compliance updates, and training new team members.

To put this in perspective: two engineers spending six months on this represents roughly $150K to $300K in fully-loaded cost, depending on your market. Most dedicated tools cost $10K to $50K annually for mid-sized teams. The build option only makes economic sense if you're confident the system will serve you for many years without major rewrites, and if those engineers wouldn't deliver more value working on core product features.
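The arithmetic is simple enough to rerun with your own numbers. This sketch takes its inputs from this page: two engineers for six months is one engineer-year, so the $150K-$300K figure implies roughly $150K-$300K per fully loaded engineer-year, and the TL;DR puts ongoing maintenance at 20-30% of one developer's time. The three-year horizon, counting maintenance for every year of it, and ignoring implementation work on the buy side are simplifying assumptions.

```typescript
interface BuildInputs {
  engineers: number;
  buildMonths: number;
  loadedCostPerEngineerYear: number; // salary plus benefits and overhead
  maintenanceFraction: number;       // share of one developer's time spent on upkeep
  years: number;                     // evaluation horizon
}

function buildCost(i: BuildInputs): number {
  const initial = i.engineers * (i.buildMonths / 12) * i.loadedCostPerEngineerYear;
  const maintenance = i.years * i.maintenanceFraction * i.loadedCostPerEngineerYear;
  return Math.round(initial + maintenance); // round to whole dollars to avoid float noise
}

function buyCost(annualLicense: number, years: number): number {
  return annualLicense * years;
}

const low = buildCost({ engineers: 2, buildMonths: 6, loadedCostPerEngineerYear: 150000, maintenanceFraction: 0.2, years: 3 });
const high = buildCost({ engineers: 2, buildMonths: 6, loadedCostPerEngineerYear: 300000, maintenanceFraction: 0.3, years: 3 });
console.log(low, high); // 240000 570000
console.log(buyCost(10000, 3), buyCost(50000, 3)); // 30000 150000
```

With these inputs, the low-end build estimate ($240K) is still above the high-end three-year license cost ($150K). The model leaves out opportunity cost, the engineering needed to install and integrate a tool, and procurement overhead, so treat it as a starting point rather than an answer.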

The hidden cost is opportunity cost. The engineering time spent building and maintaining onboarding infrastructure is time not spent on core product features, performance improvements, or other growth initiatives. For most SaaS companies, onboarding infrastructure is not a competitive advantage. It's table stakes. The question is whether building it internally is the best use of scarce engineering resources.

This approach breaks down when the maintenance burden exceeds expectations, when the team that built it leaves and knowledge transfer doesn't happen, or when the business realizes the opportunity cost is too high. It also struggles when requirements evolve faster than the internal platform can adapt, for example when you need to support new platforms, add localization, or meet new compliance requirements.

Approach three: Use a dedicated in-app onboarding or product adoption tool

Many teams adopt a specialized tool designed specifically for in-app onboarding and product adoption. These tools provide a visual editor for creating tours, tooltips, checklists, and other onboarding patterns, along with targeting and segmentation, experimentation and A/B testing, analytics and event tracking, versioning and rollback, and governance features like permissions and audit logs.

This approach works well when you need fast iteration without engineering dependency, when onboarding changes frequently based on experiments or user feedback, when you need to support multiple user segments with different experiences, and when you want to avoid the long-term maintenance burden of a custom system.

The reality of implementation is messier than vendor demos suggest. You still need engineering to install the JavaScript snippet and handle the initial integration. The promise of "no code changes" is aspirational. Custom event tracking, deep product integrations, and complex targeting rules still require developer work. You'll also need to navigate the organizational question of who owns the tool. Is it Product? Growth? Marketing? This matters because it determines who can make changes, who's responsible for results, and who gets blamed when something breaks.

The workflow typically involves product or growth team members using a visual editor to create or modify onboarding flows, setting targeting rules based on user attributes or behavior, previewing changes in a staging environment, then publishing to production. The advantage is that routine changes don't require engineering cycles or deploys, though significant changes to tracking or integration points still do.
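Versioning and rollback sit underneath that publish step, whether a vendor provides them or you build them. A minimal sketch of the data model, assuming an append-only history of published versions per flow and a separate "live" pointer; the `FlowStore` class and its methods are hypothetical.

```typescript
interface FlowVersion {
  version: number;
  content: string;     // serialized flow definition (steps, copy, targeting)
  publishedBy: string; // who published it, for the audit trail
}

class FlowStore {
  private history = new Map<string, FlowVersion[]>();
  private live = new Map<string, number>(); // flowId -> live version number

  publish(flowId: string, content: string, publishedBy: string): number {
    const versions = this.history.get(flowId) ?? [];
    const version = versions.length + 1;
    versions.push({ version, content, publishedBy });
    this.history.set(flowId, versions);
    this.live.set(flowId, version);
    return version;
  }

  // Rollback only moves the live pointer; history is never rewritten, so the audit trail stays intact.
  rollback(flowId: string, toVersion: number): void {
    const versions = this.history.get(flowId) ?? [];
    if (!versions.some((v) => v.version === toVersion)) {
      throw new Error(`Flow ${flowId} has no version ${toVersion}`);
    }
    this.live.set(flowId, toVersion);
  }

  liveContent(flowId: string): string | undefined {
    const liveVersion = this.live.get(flowId);
    return this.history.get(flowId)?.find((v) => v.version === liveVersion)?.content;
  }
}

const store = new FlowStore();
store.publish("welcome-tour", "v1: three steps", "pm@example.com");
store.publish("welcome-tour", "v2: five steps", "growth@example.com");
store.rollback("welcome-tour", 1);
console.log(store.liveContent("welcome-tour")); // v1: three steps
```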

The main advantage is speed and independence for most onboarding work. Non-engineers can create and iterate on standard flows without waiting for engineering cycles. Changes go live immediately, not tied to release schedules. Experimentation becomes faster and lower-friction. The tool vendor handles maintenance, updates, security, and compliance. Your engineering team can focus on core product work.

The trade-offs are real. You're adding another JavaScript bundle to your application, which means page load impact and performance budget concerns that engineering will raise. You're constrained by what the tool supports, though most mature tools cover common onboarding patterns. You're also adding another service to your stack, with associated costs, security review, procurement cycles, SSO setup, and annual renewal negotiations. For teams already managing ten or more SaaS tools, this organizational overhead can tip the decision toward building, even when buying makes technical sense.

You're also creating a data dependency. User behavior data flows through the vendor's system. This raises questions about data ownership, GDPR compliance, data processing agreements, and whether you're creating vendor lock-in. If you decide to switch tools after two years of behavioral data accumulation, migration can be painful and historical data may be lost.

This approach breaks down in a few scenarios. Your onboarding needs might be so specific or deeply integrated with product logic that a general-purpose tool can't support them. Data residency or compliance requirements might prevent using a third-party service. Or onboarding might be stable and infrequent enough that the cost and complexity of a dedicated tool isn't justified.

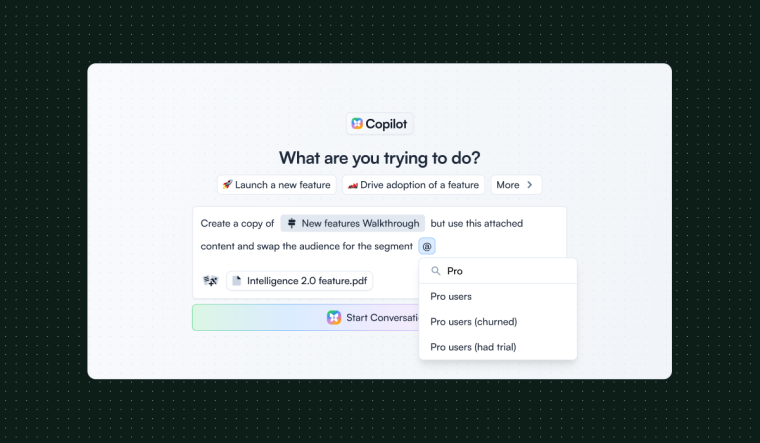

Where Chameleon fits: Chameleon works well for product-led SaaS teams that need to iterate quickly on onboarding without engineering bottlenecks, especially when you're running frequent experiments or supporting multiple user segments. It's designed for teams that care about UX quality and want onboarding that feels native to their product, not like a generic overlay. That said, if your onboarding is deeply integrated with backend logic, changes infrequently, or you have unique compliance requirements that prevent third-party tools, building internally may make more sense. Book a demo to see if it fits your workflow.

The mobile app problem

For SaaS products with mobile apps, this decision becomes significantly more complex. Web-based onboarding tools don't work in native mobile apps. You're looking at either native SDKs from the vendor (which often lag behind web features), building parallel onboarding systems for web and mobile, or accepting that mobile onboarding will remain release-bound even if web becomes more flexible.

Mobile release cycles are slower. Changes require app store review, which means even with a tool, you can't iterate as quickly on mobile as you can on web. Users also don't update apps immediately, so you're supporting multiple versions simultaneously. This often means teams end up with hybrid approaches by necessity: a tool for web, custom code for mobile, and constant work to keep experiences roughly consistent across platforms.
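Because older app versions stay in circulation, mobile onboarding content usually has to declare the oldest app version that can render it. A sketch of that check, assuming plain `major.minor.patch` version strings (no pre-release tags) and a hypothetical `minAppVersion` field on each flow:

```typescript
// Compares "major.minor.patch" numerically; negative if a < b, zero if equal, positive if a > b.
// Missing parts count as 0, so "1.2" equals "1.2.0".
function compareVersions(a: string, b: string): number {
  const pa = a.split(".").map(Number);
  const pb = b.split(".").map(Number);
  for (let i = 0; i < 3; i++) {
    const diff = (pa[i] ?? 0) - (pb[i] ?? 0);
    if (diff !== 0) return diff;
  }
  return 0;
}

interface MobileFlow {
  id: string;
  minAppVersion: string; // oldest app build that ships the UI components this flow needs
}

function eligibleFlows(flows: MobileFlow[], installedVersion: string): string[] {
  return flows
    .filter((f) => compareVersions(installedVersion, f.minAppVersion) >= 0)
    .map((f) => f.id);
}

const flows: MobileFlow[] = [
  { id: "basic-tour", minAppVersion: "4.2.0" },
  { id: "new-checklist", minAppVersion: "4.10.0" },
];
console.log(eligibleFlows(flows, "4.9.3").join(", "));  // basic-tour
console.log(eligibleFlows(flows, "4.10.1").join(", ")); // basic-tour, new-checklist
```

Comparing numerically rather than as strings matters here: as plain strings, "4.9.3" sorts after "4.10.0", which would show the new checklist to users whose app can't render it.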

If your product has significant mobile usage, factor this into your decision. The "buy" option may only solve half your problem, and the "build" option needs to account for native mobile development in addition to web infrastructure.

Where a dedicated tool fits and what it doesn't replace

A dedicated in-app onboarding tool sits between your product and your users, providing a layer for creating and managing onboarding experiences without modifying application code. It typically works by injecting a JavaScript snippet into your application, which then renders onboarding UI elements based on rules and content managed in the tool's platform.

Teams that benefit most from this approach are those running frequent onboarding experiments and supporting multiple user segments with different needs. It also works well for teams with constrained engineering resources who need to iterate quickly based on activation or adoption data. It's particularly valuable for product-led growth companies (which grow ~50% faster than traditional sales-led ones) where onboarding directly impacts conversion and expansion, and for teams that have already tried building custom onboarding and found the maintenance burden unsustainable.

These tools aren't meant to replace your product analytics platform, your customer data infrastructure, or your core application logic. They're designed to complement your existing stack by handling the specific problem of in-app onboarding and guidance. Most integrate with analytics tools like Segment, Amplitude, or Mixpanel rather than trying to replace them. They also don't replace onboarding that happens outside your product, like email sequences, sales-assisted onboarding, or human-led training.

When evaluating tools in this category, look at how they handle your specific technical constraints: performance and bundle size impact, integration depth with your existing analytics stack, flexibility in targeting and segmentation logic, support for your platforms (web, mobile, desktop), data residency and compliance requirements, and pricing model (per-seat, usage-based, or flat fee). Also consider the less obvious factors: quality of customer support, frequency of product updates, and whether the vendor's roadmap aligns with where your product is heading.

Tools in this category include Appcues, Pendo, WalkMe, Chameleon, and Userpilot, each with different strengths around use cases, company size, or platform support. The choice between them usually comes down to specific workflow needs, integration requirements, and how the tool fits into your existing stack.

Approach four: Hybrid model with lightweight tooling and selective custom builds

Some teams take a middle path: use a dedicated tool for most onboarding needs, but build custom components for specific high-value or highly integrated flows. This lets you move quickly on standard onboarding while maintaining control over the experiences that truly differentiate your product.

For example, you might use a tool for general feature tours, tooltips, and checklists, but build a custom interactive tutorial for your product's core workflow, or a specialized setup wizard that needs deep integration with your backend systems.

This approach works when you have clear boundaries between standard onboarding and strategic custom experiences, when your engineering team is comfortable integrating multiple systems, and when you want to optimize for both speed and control.

The advantage is flexibility. You get fast iteration for most onboarding while keeping full control over the experiences that matter most. You also reduce the scope of what you need to build and maintain internally.

The challenge is managing the boundary between the two systems. You need clear ownership and decision criteria for what goes where. You need to ensure consistent user experience across both systems. And you need to avoid the trap of gradually rebuilding the tool's functionality internally because "we need just one more custom thing."

In practice, this pattern is more common than teams admit. Many start with a tool, then gradually build custom components alongside it, and eventually end up with two half-baked systems. Neither is fully maintained, the user experience becomes inconsistent, and the team spends more time managing the boundary than they would have spent on either approach alone. If you choose this path, be disciplined about the boundary and honest about whether you're actually getting the benefits of both approaches or just the overhead.

This approach breaks down when the boundary becomes unclear or constantly shifts, when maintaining two systems creates more overhead than it saves, or when the team lacks the discipline to resist scope creep on the custom side.

Patterns from teams that successfully improve onboarding infrastructure

Teams that successfully solve this problem tend to follow a few common patterns.

They start by clearly defining what success looks like. Not just "better onboarding," but specific activation metrics, time-to-value targets, or support ticket reduction goals. This helps them evaluate whether the investment in infrastructure is actually moving the metrics that matter. The challenge is that "clearly defining success" often means navigating conflicting stakeholder metrics. Product cares about activation, Growth cares about conversion, Support cares about ticket volume, and Engineering cares about maintenance burden. Getting alignment on which metric actually matters takes longer than building the first version of the infrastructure.

They separate the decision about what to build from the decision about how to build it. First, they validate that changing onboarding actually improves activation. They might do this with manual outreach, Loom videos, or even hardcoded flows in the product. Only after proving the value do they invest in infrastructure to scale it.

They're realistic about engineering capacity and opportunity cost. They calculate the initial build time and the ongoing maintenance burden, then compare that to what else the engineering team could be working on. They also factor in the cost of slow iteration. If running an onboarding experiment takes two weeks instead of two hours, how much learning are you losing?

They think about ownership and knowledge transfer. Who will maintain this system in two years? What happens when the person who built it leaves? How do you onboard new team members? Custom systems often fail not because they're technically inadequate, but because organizational knowledge and ownership disappear. With third-party tools, the ownership question is different but still real: does Product own it or Growth? Who has admin access? Who's responsible when something breaks? These questions need answers before you buy, not after.

They account for analytics instrumentation debt. Whether you build or buy, tracking events accumulate over time. Event names become inconsistent, properties drift, and nobody remembers what "onboarding_step_completed_v2_final" actually means. This isn't unique to onboarding infrastructure, but it's worse here because onboarding touches so many parts of the product. Teams that succeed plan for this from the start with naming conventions, documentation, and regular cleanup.
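Naming conventions hold up best when something enforces them. A sketch of a lint check you could run in CI over a tracking plan, assuming a hypothetical convention of lowercase snake_case names with an `onboarding_` prefix, letters only, and no version or status segments such as `v2` or `final`:

```typescript
// Hypothetical convention: onboarding_<object>_<action>, lowercase letters and underscores only.
const ALLOWED_NAME = /^onboarding(_[a-z]+)+$/;
// Segments that usually signal a name was patched rather than documented.
const BANNED_SEGMENTS = new Set(["final", "new", "old", "tmp"]);

function lintEventName(name: string): string[] {
  const problems: string[] = [];
  if (!ALLOWED_NAME.test(name)) {
    problems.push("must be lowercase snake_case starting with onboarding_, letters only");
  }
  for (const segment of name.split("_")) {
    if (/^v\d+$/.test(segment)) {
      problems.push(`version segment "${segment}": version the event schema, not the name`);
    }
    if (BANNED_SEGMENTS.has(segment)) {
      problems.push(`ambiguous segment "${segment}"`);
    }
  }
  return problems;
}

console.log(lintEventName("onboarding_step_completed").length); // 0
console.log(lintEventName("onboarding_step_completed_v2_final").length); // 3
```

The specific rules matter less than having them written down and checked automatically, so drift is caught when an event is added rather than during a cleanup months later.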

They're honest about what's truly differentiated. Most onboarding patterns (tours, tooltips, checklists, segmentation, experimentation) are not competitive advantages. They're necessary capabilities, but building them internally doesn't make your product better. The question is whether you can get those capabilities faster and cheaper by buying them.

They also recognize when building makes sense. If onboarding is deeply integrated with your product's core functionality, if you have unique compliance or data residency requirements, or if you're a large company with dedicated platform teams, building internally might be the right choice. The key is making that decision deliberately, with full awareness of the costs.

When this is not the right problem to solve

Not every team needs sophisticated onboarding infrastructure. If your product is simple enough that users understand it immediately, if your onboarding is stable and rarely changes, or if you're still in early product-market fit stages where onboarding is the least of your problems, investing in infrastructure is premature.

Similarly, if your activation problem isn't actually about onboarding (if it's really about product value, pricing, or market fit), better onboarding infrastructure won't help. You need to validate that onboarding changes actually move your activation metrics before investing in the ability to iterate on them quickly.

This is also not the right focus if you don't have the organizational capacity to act on what you learn. If you can't run experiments, analyze results, and iterate based on data, faster onboarding infrastructure won't deliver the improvements you're hoping for.

Finally, if your engineering team is already underwater and you're considering building a custom system, be very careful. The maintenance burden is real and ongoing. If you can't commit to maintaining and evolving the system over multiple years, you're likely creating technical debt that will eventually force a painful migration.

Thinking through your next steps

Start by understanding your current state. How often does your onboarding change today? How long does it take to ship a change? Who's blocked by the current process? What experiments do you want to run but can't because of infrastructure limitations?

Then look at your activation data. Do you know which onboarding experiences correlate with activation? Do you have hypotheses about what to test? If you don't have clear ideas about what to change, infrastructure won't help. You need to do the research and strategy work first.

Calculate the real cost of your options. For building, estimate not just initial development time but ongoing maintenance, opportunity cost, and knowledge transfer risk. For buying, look at licensing costs, implementation time, and how the tool fits into your existing stack. Be realistic about what your team can actually maintain. Factor in the organizational overhead: procurement cycles, security reviews, SSO setup, training, and annual renewals.

Use this simple framework to guide your decision:

Build if: You have 50+ engineers, onboarding is a core product differentiator, you have unique compliance requirements that prevent third-party tools, or you're confident you can maintain the system for 3+ years.

Buy if: You need to run frequent experiments, your engineering team is constrained, onboarding patterns are standard (tours, tooltips, checklists), or you've already tried building and found the maintenance burden unsustainable.

Wait if: You're pre-product-market fit, onboarding changes rarely, you don't have clear hypotheses to test, or your activation problem isn't actually about onboarding.

When evaluating vendors, ask specific questions: How do you handle mobile apps? What happens to our data if we leave? What's your page load impact? How do custom events work? Can we export historical data? What does your API allow us to build? How often do you ship updates? What happens when your service goes down?

Consider running a small experiment. If you're leaning toward a dedicated tool, most offer trials or pilot programs. Pick one high-value onboarding flow and see if you can improve it faster with the tool than you could with your current process. If you're leaning toward building, scope out a minimal version and see if you can ship it and maintain it without it becoming a burden.

Talk to teams at similar companies who've made this decision. Ask them not just what they chose, but how it's working two years later. Specifically ask: What surprised you about the maintenance burden? How did ownership evolve? What would you do differently? Did you end up with a hybrid system even if you didn't plan for one? How do you handle mobile? What happened when the person who built it left?

Most importantly, make the decision deliberately. A common failure mode is drifting into a custom build without fully understanding the commitment, or adopting a tool without clear goals for how it will improve your metrics. Either approach can work. For most teams, a dedicated tool will deliver faster results with less ongoing burden, but only if you're honest about your constraints, your capacity, and what you're actually trying to achieve.
