You wouldn't ship a feature without experimenting. Yet that's exactly what you're doing with your Interactive Demos. The irony? That Demo is often the first time users truly understand your feature. It's their "aha" moment. And you're not testing it? 🥴

But creating the Demo is only half the battle. The other half? Testing what actually drives adoption.

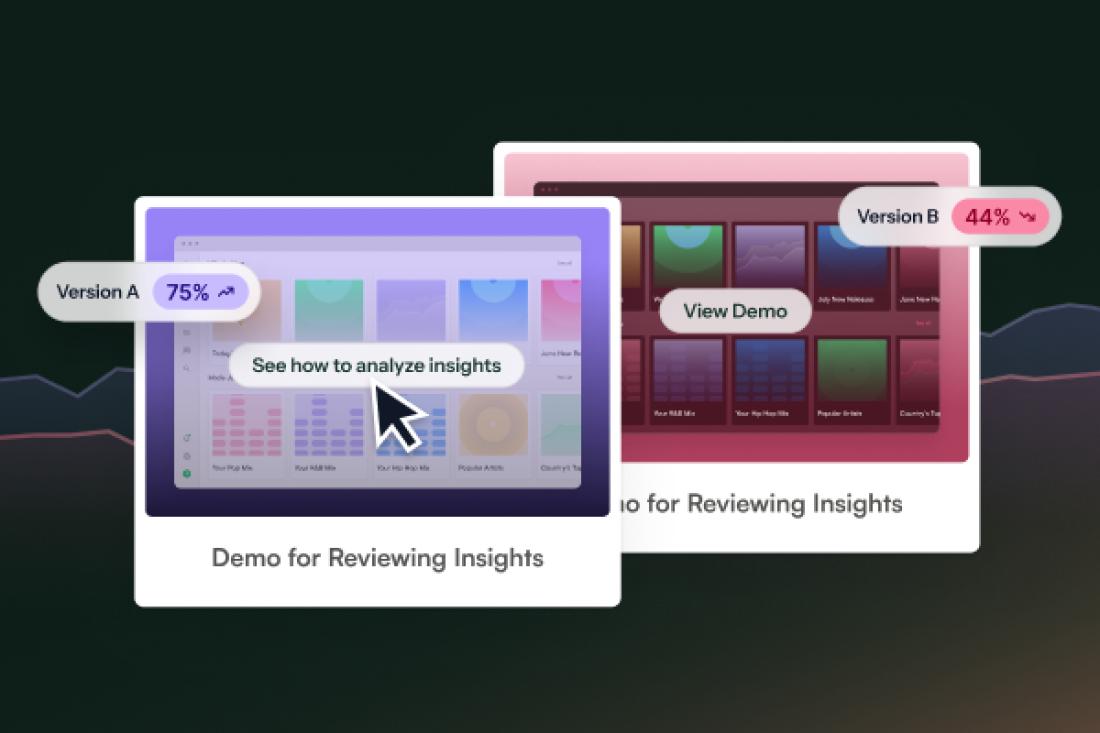

One title change can increase feature adoption by 50%. One structural test can double your activation rate. And with modern interactive demo tools, you can run these tests while measuring actual feature adoption, not just demo views.

So, let's explore how to A/B test your interactive demos, because every day you're not testing is a day you're choosing to guess about what drives adoption.

We're seeing many people learn about functionality through multiple channels, so it's really valuable to create an Interactive Demo every time you ship a feature, small or big, and to test it.

The Product Demo Paradox: Your "Perfect" Demo Might Be Killing Adoption

You've probably spent weeks crafting what you think is the perfect Demo for your latest feature launch. You've mapped it to your user journey. You've aligned it with your activation metrics. Your product team consensus'd the hell out of it.

And it's probably wrong. Not because you're bad at this (you're not). But because you're shipping your best guess instead of your best performer.

Think about it: You A/B test your onboarding flows religiously. You've run experiments on every product tour, every empty state, every first-run experience. But the moment someone clicks into your Demo – the moment they're actually learning about your feature – you're suddenly cool with one-size-fits-all?

That's like optimizing every part of your activation funnel except where users actually understand what your product does. Wild, right?

An Interactive Demo works like a conversion funnel: every step is a point where viewers can drop off, so if you reduce friction, more people are likely to complete it.

Reducing friction isn't about dialing down your product story. It's about finding that sweet spot between comprehensive feature education and respecting your users' time. And spoiler alert: that sweet spot is different for every feature, every user segment, every stage of the user journey.
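One way to make the "conversion" framing concrete: if each step keeps some share of the viewers who reached it, the Demo's completion rate is the product of those per-step rates, so a single high-friction step drags down the whole Demo. A minimal Python sketch; the per-step rates below are made-up assumptions for illustration, not benchmarks.

```python
from math import prod


def completion_rate(step_continuation_rates: list[float]) -> float:
    """Share of viewers who finish the Demo.

    Each entry is the (assumed) fraction of viewers at that step who
    continue to the next one; overall completion is their product.
    """
    return prod(step_continuation_rates)


# Hypothetical 8-step Demo: seven smooth steps plus one high-friction step.
with_friction = completion_rate([0.95] * 7 + [0.80])
# The same Demo after smoothing that one step to match the others.
smoothed = completion_rate([0.95] * 8)

print(f"With friction: {with_friction:.1%}, smoothed: {smoothed:.1%}")
```

Under these assumed rates, fixing one step lifts completion from roughly 56% to roughly 66%.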

The Real Cost to Your Product Metrics (Time to Get Uncomfortable)

Let's talk about what this actually means for your activation and adoption metrics.

Say you're launching a new feature. You're driving 1,000 users to your Demo monthly through in-app announcements, help docs, and email campaigns. Your current Demo leads to a 12% feature adoption rate. Not terrible.

But what if a simple title change could bump that to 18%? That's 60 extra activated users a month, a 50% increase in feature adoption from changing a few words. Over a quarter? That's 180 users who could have been getting value from your feature but didn't, because you didn't test.

Now multiply that by every feature launch. Every product update. Every onboarding flow.

Starting to impact your quarterly product metrics? Yeah, thought so.
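To rerun this example's arithmetic with your own traffic: the gain is the difference in adopted users, and the lift is that difference divided by the baseline. A small sketch; the function name and the three-month quarter are our own assumptions.

```python
def adoption_gain(monthly_viewers: int, baseline_rate: float,
                  variant_rate: float, months: int = 3) -> tuple[float, float, float]:
    """Return (extra adopters per month, relative lift, extra adopters over `months`)."""
    baseline_adopters = monthly_viewers * baseline_rate
    variant_adopters = monthly_viewers * variant_rate
    extra_per_month = variant_adopters - baseline_adopters
    # Lift is relative to the baseline, which is why +6 points reads as +50%.
    relative_lift = extra_per_month / baseline_adopters
    return extra_per_month, relative_lift, extra_per_month * months


# The example above: 1,000 viewers/month, 12% baseline, 18% with the new title.
extra, lift, per_quarter = adoption_gain(1_000, 0.12, 0.18)
print(f"{extra:.0f} extra adopters/month, {lift:.0%} lift, {per_quarter:.0f} per quarter")
```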

What Actually Drives Adoption: 5 Demo Elements You Need to Test

After analyzing thousands of Demo interactions and their downstream impact on product metrics, here are the elements that consistently make or break feature adoption:

1. The Title Screen: Setting Activation Expectations

First impressions really matter. When users see your title slide, or your Demo embedded somewhere, they decide: is it worth clicking through and diving into this Demo?

For product teams, this is about activation promise. Test "See how to reduce churn by 30%" against "Customer retention dashboard walkthrough." One promises an outcome, the other describes a feature. Guess which one drives better adoption?

Pro tip: Your title should map directly to the user problem you're solving, not the feature you built. Test both and watch how it impacts your time-to-first-value metrics.

2. Demo Length: The Activation vs. Education Tradeoff

Everyone says shorter is better. Your product analytics probably say otherwise.

Pulkit Agrawal recommends keeping Demos to fewer than 10 steps, but here's what we've learned from actual adoption data: Users who complete 8-step comprehensive Demos have 2x higher 30-day retention than those who complete 4-step "quick wins" Demos.

Why? Because proper feature education drives confident usage. Test both approaches, but measure success by 30-day feature retention, not Demo completion rates.

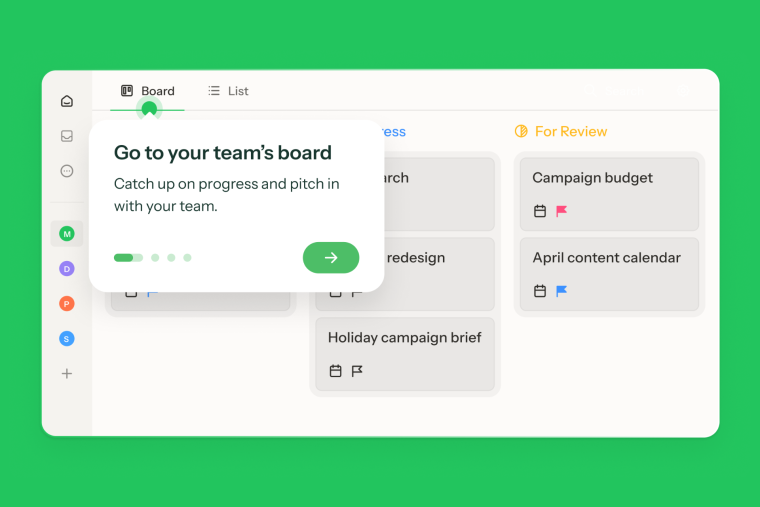

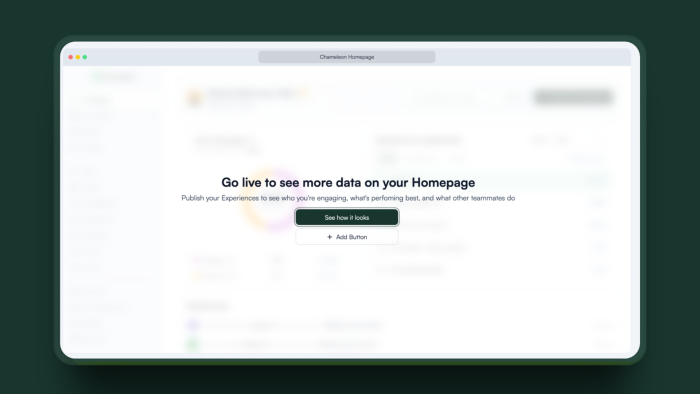

3. Progressive CTAs: Mapping to Your Activation Funnel

The CTA in your Interactive Demo marks the moment someone decides whether to take action. Make its copy count.

For product teams, this isn't just about click-through rates—it's about mapping CTAs to your activation funnel. Test progressive commitment: "See how it works" → "Try with sample data" → "Set up for my team."

Each CTA should move users one step closer to your activation event. Test different progressions and measure which path has the highest activation completion rate.

4. Feature Branching: Segmentation Without the Complexity

This is where teams can get smart about personalization without complex targeting rules.

"You can offer them multiple options," Agrawal explains. "Say, hey, what feature are you most interested in? And you can have three CTAs leading to different steps in the Demo."

For a PM, this is gold. You can create paths for different use cases without maintaining multiple Demos. Test offering branches for "New to [feature]" vs. "Upgrading from [old feature]" vs. "Power user tips." Then, track which paths lead to faster time-to-value.
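To compare branches on time-to-value, you need two numbers per path: how many viewers reached the value moment at all, and how quickly. A minimal sketch, assuming you can export one record per viewer with the branch they chose and the days until their first value event; the branch names and records are hypothetical.

```python
from collections import defaultdict
from statistics import median

# Hypothetical export: (branch chosen, days from Demo view to first value event).
# None means the viewer never reached the value event.
records = [
    ("new_to_feature", 4.0), ("new_to_feature", 6.5), ("new_to_feature", None),
    ("upgrading", 2.0), ("upgrading", 3.0),
    ("power_user", 1.0), ("power_user", None), ("power_user", 1.5),
]


def time_to_value_by_branch(records):
    """Per branch: share of viewers who reached value, and median days among those who did."""
    total = defaultdict(int)
    days = defaultdict(list)
    for branch, d in records:
        total[branch] += 1
        if d is not None:
            days[branch].append(d)
    return {
        branch: {
            "reached_value": len(days[branch]) / total[branch],
            "median_days": median(days[branch]) if days[branch] else None,
        }
        for branch in total
    }


for branch, stats in time_to_value_by_branch(records).items():
    print(branch, stats)
```

Reporting the reach rate next to the median matters: a branch can look fast simply because only its most motivated viewers ever reach value.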

5. Connecting to Product Data: The "Aha" Accelerator

Users don't really want to spend time learning how to do something; they're much more interested in what they can do.

Test showing actual product data vs. generic examples, highlighting ROI vs. features, and using outcome-focused copy vs. feature-focused copy. Your product adoption metrics will tell you exactly what resonates.

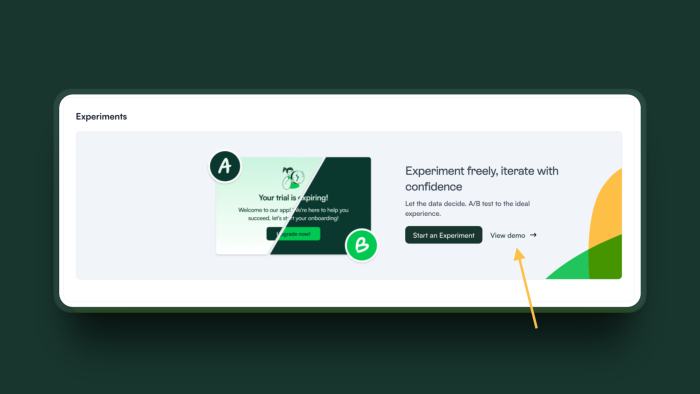

The Game Changer: Testing for Actual Feature Adoption (Not Just Views)

Here's where Chameleon Demos fundamentally shift how product teams should think about Demo effectiveness.

We're going to be able to set the goal not just as completion of the Demo, but as an event in the product, such as the viewer actually using the feature.

This is massive for product teams. You're not measuring Demo performance by views or completion – you're measuring whether viewers hit your activation milestones.

Did they view the Demo and then complete the feature setup? Did they activate within 7 days? Did they become weekly active users of that feature? This is the data that matters for your product metrics.
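Computed by hand, "Did they activate within 7 days?" is a join between Demo views and product events. A sketch, assuming you can export each viewer's first Demo view time and first activation-event time keyed by user ID; the data shapes are hypothetical and this function is our illustration, not a Chameleon API.

```python
from datetime import datetime, timedelta


def activation_rate_within(demo_views: dict[str, datetime],
                           activations: dict[str, datetime],
                           window: timedelta = timedelta(days=7)) -> float:
    """Share of Demo viewers whose activation happened within `window` after viewing.

    demo_views maps user ID -> first Demo view; activations maps user ID -> first
    activation event. Users who activated *before* viewing are not credited to the Demo.
    """
    if not demo_views:
        return 0.0
    activated = sum(
        1
        for user, viewed_at in demo_views.items()
        if user in activations and viewed_at <= activations[user] <= viewed_at + window
    )
    return activated / len(demo_views)


# Hypothetical sample data.
views = {
    "u1": datetime(2025, 1, 1),
    "u2": datetime(2025, 1, 1),
    "u3": datetime(2025, 1, 2),   # never activates
    "u4": datetime(2025, 1, 5),
}
acts = {
    "u1": datetime(2025, 1, 3),   # within 7 days: counts
    "u2": datetime(2025, 1, 15),  # too late: doesn't count
    "u4": datetime(2025, 1, 4),   # before viewing: doesn't count
}
print(activation_rate_within(views, acts))
```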

The Product Journey Integration: Where and When Matters

Your Demo doesn't exist in isolation; it's part of your broader product experience. Smart product teams are testing not just Demo variations, but Demo placement within the user journey:

In empty states vs. help documentation: We've seen the same Demo drive 3x higher activation when embedded in empty states versus linked from help docs.

During onboarding vs. feature discovery: New users might need the comprehensive version, while existing users just need the delta. Test both.

Pre-activation vs. post-activation: Sometimes showing the value before setup drives commitment. Sometimes showing it after prevents overwhelming users. Test it.

Triggered by behavior vs. always visible: Users who've attempted a feature three times might be ready for education, while first-time visitors might not be. Test your triggers.

The same Demo can dramatically impact your adoption metrics depending on when and where it appears in the user journey.
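A behavior-based trigger like the "three attempts" example can be written as a small rule, and the threshold itself becomes something you can test. A sketch; the `UserState` fields, the variant names, and the threshold values are hypothetical, not Chameleon's targeting configuration.

```python
from dataclasses import dataclass


@dataclass
class UserState:
    feature_attempts: int   # times the user has tried the feature without finishing setup
    has_activated: bool     # already hit the activation event
    has_seen_demo: bool     # already viewed this Demo


def should_show_demo(user: UserState, attempt_threshold: int = 3) -> bool:
    """Show the Demo only to users who have tried enough times but haven't activated."""
    return (
        not user.has_activated
        and not user.has_seen_demo
        and user.feature_attempts >= attempt_threshold
    )


# Testing the trigger itself: each variant uses a different threshold.
TRIGGER_VARIANTS = {"show_early": 1, "show_after_struggle": 3}

first_timer = UserState(feature_attempts=1, has_activated=False, has_seen_demo=False)
for variant, threshold in TRIGGER_VARIANTS.items():
    print(variant, should_show_demo(first_timer, threshold))
```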

The Compound Product Wins: How A/B Testing Rolls Into Massive Adoption Gains

Here's what happens when product teams commit to systematic Demo testing:

Month 1: You discover your monolithic onboarding Demo works better as feature-specific micro-Demos. Feature activation rate jumps 40%. Your activation funnel finally makes sense.

Month 2: You test adding use-case branches. Enterprise users self-select into advanced workflows while startups get the quick-win path. Time-to-value decreases by 3 days.

Month 3: You test connecting Demo views to in-app targeting. Users who viewed but didn't activate get contextual tooltips. Feature adoption increases by another 25%.

Quarter 2: Your tested, optimized Demo strategy drives 60% higher feature adoption than your original approach. You hit your quarterly activation OKRs for the first time.

This isn't theoretical. This happens when you treat Demos as part of your product experience, not just educational content.

The Chameleon Advantage: Ship Features Faster, Test Smarter

Remember the old way? Record multiple Demo versions, coordinate with design, wait for engineering to implement different variants, manually track performance?

We killed that process.

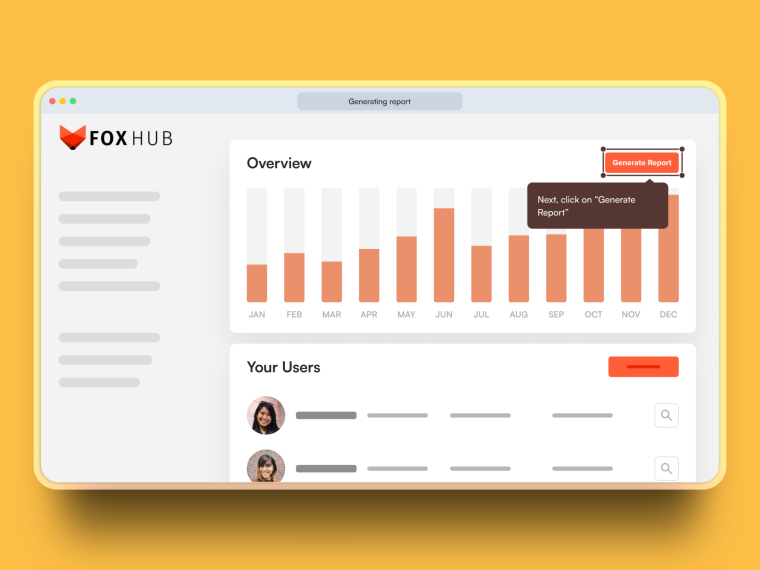

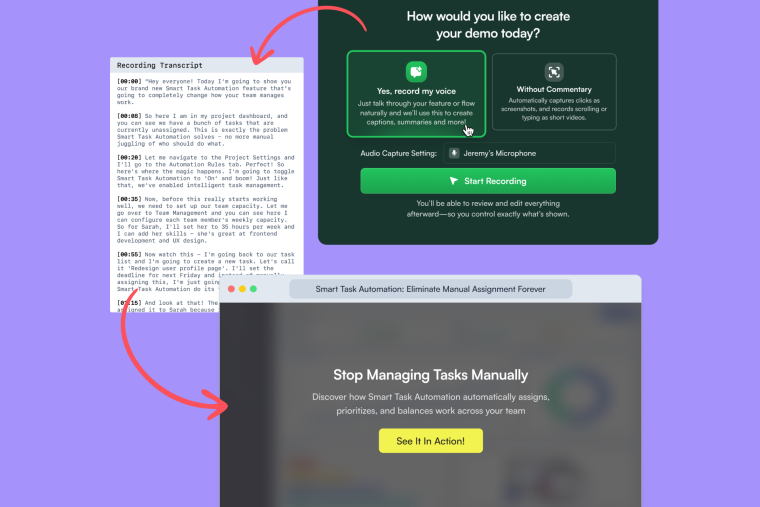

With Chameleon Demos, you explain your feature once. Talk through it like you're showing a colleague. Our AI generates everything – your Demo, your in-app announcements, your onboarding tooltips, and even your release notes.

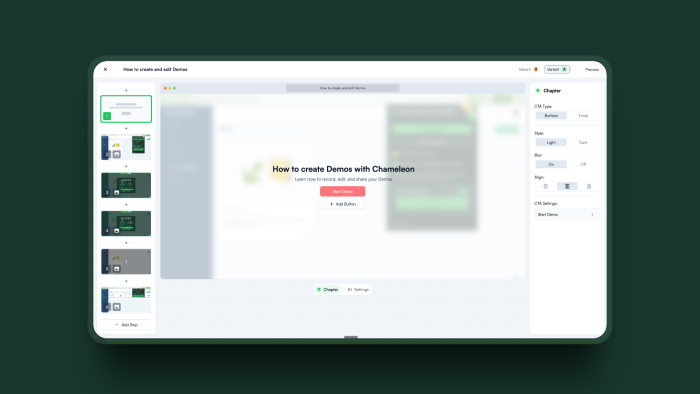

For A/B testing, creating a variant is one click. No re-recording, no design resources, no engineering tickets. Just duplicate, modify, and test.

You can test different narratives for the same feature (technical depth vs. value focus, step-by-step vs. outcome-first), all from that single recording.

And because Chameleon Demos are explicitly built for product adoption, you can:

Track whether Demo viewers hit your activation events

Automatically segment them for follow-up experiences

Trigger in-app guides based on Demo engagement

Measure impact on your actual product metrics

This isn't about Demo metrics – it's about product metrics.

The Anti-Patterns: What Not to A/B Test

Quick reality check for product teams:

Don't test everything at once: Changing multiple variables means you won't know what moved your activation metrics. One test, one variable, clear results.

Don't test without meaningful traffic: If only 20 users see your Demo weekly, it will take months to reach statistically significant results. Focus on driving discovery first.

Don't optimize for the wrong stage: Testing for new user onboarding when most viewers are existing users is pointless. Segment first, then test.

Don't test in isolation from your product metrics: Demo completion rate doesn't matter if it doesn't correlate with feature adoption. Always connect to downstream metrics.
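How much traffic is "meaningful"? A common rough estimate is the per-variant sample size for a two-sided two-proportion z-test. A sketch using only Python's standard library; the 5% significance level and 80% power are conventional defaults we've assumed, and the 12% and 18% rates reuse the example from earlier in this post.

```python
from math import ceil, sqrt
from statistics import NormalDist


def users_per_variant(p_control: float, p_variant: float,
                      alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate viewers needed per variant to detect p_control -> p_variant."""
    if p_control == p_variant:
        raise ValueError("rates must differ to compute a sample size")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p_control + p_variant) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_control * (1 - p_control) + p_variant * (1 - p_variant))
    ) ** 2
    return ceil(numerator / (p_variant - p_control) ** 2)


n = users_per_variant(0.12, 0.18)
weeks_at_20_per_week = ceil(2 * n / 20)  # two variants share 20 viewers a week
print(n, weeks_at_20_per_week)
```

For 12% vs. 18% this comes out to roughly 555 viewers per variant, so at 20 Demo viewers a week split across two variants the test would run for more than a year. Smaller differences need far more traffic.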

Your Product Team's Next Move: From Launch and Hope to Launch and Know

The gap between product teams that guess and product teams that know their adoption drivers? It's literally one test away.

Here's your tactical playbook:

Pick your most important feature launch from last quarter

Check its current adoption rate (be honest)

Create a Demo variant with one change (title or length is a good start)

Run for two weeks, measuring actual feature activation, not Demo metrics

Apply learnings to your next feature launch

Compound these wins quarter over quarter
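When the test window ends, a standard way to check whether a difference in activation rates is more than noise is a two-proportion z-test. A minimal sketch using only the Python standard library; it assumes you've exported, per variant, the number of viewers and how many of them hit your activation event. It's our illustration, not a Chameleon feature.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(activated_a: int, viewers_a: int,
                          activated_b: int, viewers_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for variant B's activation rate vs. variant A's."""
    p_a = activated_a / viewers_a
    p_b = activated_b / viewers_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (activated_a + activated_b) / (viewers_a + viewers_b)
    se = sqrt(pooled * (1 - pooled) * (1 / viewers_a + 1 / viewers_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Same 12% vs. 18% rates at two traffic levels.
print(two_proportion_z_test(120, 1_000, 180, 1_000))
print(two_proportion_z_test(12, 100, 18, 100))
```

With 1,000 viewers per variant, 12% vs. 18% is a clear result; with 100 per variant, the same rates are well within noise.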

The Bottom Line for Product Teams

Every feature you ship represents weeks or months of work. User research, design, development, QA – massive investment. And then you explain it once, ship that explanation, and hope for the best?

That's insane.

The future of product adoption isn't about creating perfect Demos. It's about creating Demos that evolve based on actual user behavior and real adoption data.

Because here's the truth every PM and PMM knows: the best feature in the world is worthless if users don't understand its value. And you can't afford to guess at what makes that value click.

The only thing worse than low feature adoption? Knowing you could have fixed it with a straightforward test.

Ready to stop hoping and start knowing what drives adoption? Your first Demo A/B test is waiting.

Want to see how A/B testing Interactive Demos can improve your feature adoption metrics? Try Chameleon Demos free – built specifically for product teams who measure success by activation, not just awareness. Because shipping features without testing their story is so 2024.

FAQs on A/B Testing your Interactive Demos

What is A/B testing for Interactive Demos?

A/B testing for Interactive Demos is the process of creating two or more variations of the same demo—changing elements like the title screen, length, CTA copy, or tone—and measuring which version drives better product adoption. Instead of guessing what works, you use real user behavior to decide.

Why should I A/B test my Interactive Demos?

Most teams ship a single demo and hope it works, but that often leads to high drop-off rates and missed adoption opportunities. Testing allows you to discover which variation actually converts viewers into active users, helping you avoid relying on assumptions.

Which demo elements should I test?

The most impactful demo elements to test include:

Title screen: first impression and value promise

Demo length: short vs. in-depth flows

CTA copy: e.g., “Start Free Trial” vs. “See My ROI”

Branching options: personalized demo paths

Voice and tone: formal vs. conversational styles

How long should an Interactive Demo be?

There’s no universal rule—shorter isn’t always better. Four-step demos may work well for awareness-stage visitors, while eight-step deep dives might resonate with qualified prospects. The only way to know is by testing different demo lengths with your audience.

What mistakes should I avoid when A/B testing demos?

Avoid testing too many variables at once, running tests without enough traffic to reach significance, and optimizing for the wrong metric (like time watched instead of product activation). Always test one element at a time and align your goals with adoption outcomes.

Can AI help with creating and testing demo variations?

Yes. Tools like Chameleon Demos use AI to generate annotations, create variations, and even produce supporting assets like email copy or help docs from a single recording. This makes launching and testing new demo versions significantly faster.